Buy bitcoin deep web

26 comments

Como comprar bitcoinspor que todavia no usas faircoin

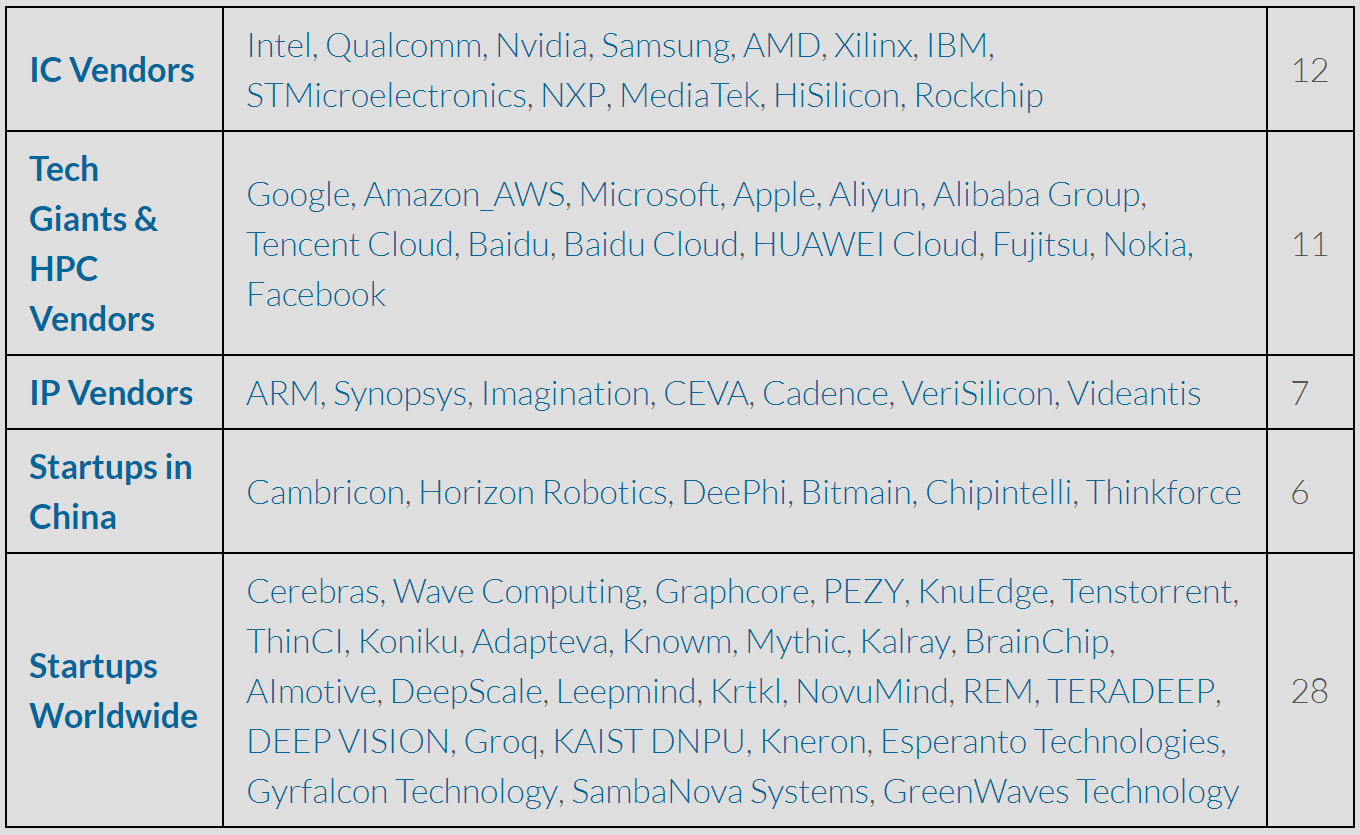

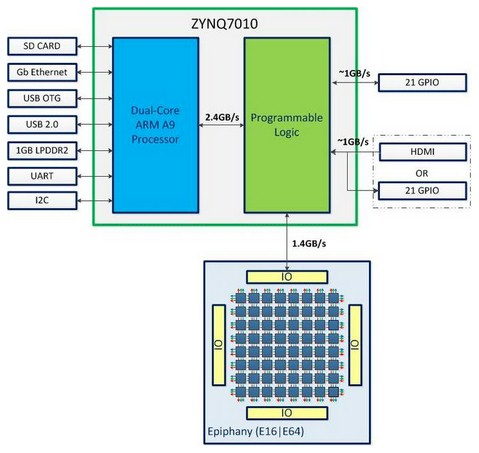

Adapteva is turning to Kickstarter for their Parallella computer to get the funding to take their Epiphany multicore daughterboard and shrink it down into a single chip. What I got from the article was that the OS runs on the main CPU [dual-core Arm A9], and you could code an application to run on the massively parallel co-processor.

The coprocessor is -likely- not an ARM processor, or even if it is, it might not be compatible with the Cortex A9 API — hence, it will not be that simple. I suspect that it would realtively easy to write small kernels for the coprocessors and use some simple MPI to signal between the main program and the kernel.

I got the feel that that was just the sort of reason they want to build it — to provide a test bed for such applications. Some system with lots of CPUs that was supposed to be great, but never made it to the big leagues because of the difficulties of designing software for such a system. It looks very much like an array of Transputers stuck into a single package.

XMos is the modern equivalent. Here is a 64 XCore Hyper Cube implementation. I had the pleasure of working with a core XMOS system and it is surprisingly easy to program with a C derived language that is optimized for parallel computing. I figured I would chime in and answer some of the questions.

I can tell you one thing for sure. This is NOT vaporware! Both would be accessed through the same programming framework like OpenCL. The OpenCL code would need to be written in a parallel way, but that seems to be the chosen approach until we come up with something better one of the goals of Parallella by the way.

Now it is only useful for graphics. I was hoping for many better applications. What's the advantage of this form factor? GPUs are only useful for a limited number of computations like pixel shaders, or really anything to do with graphics. Also, all GPU cores calculate the same thing at the same time. This is a more general purpose solution — it can calculate anything — and each core can be controlled separately. Interesting, they clearly know how much of a problem the difficulty of parallel computing is, and want the community to help them solve it so their chips are useful.

As said above, requires quite a lot of software work. Could be great for specific tasks I think. I would assume you could effectively do that with this system if you wanted. It supports USB and would just require a little coding to accept parallel tasks over a wire. But you could just have it suck code to run from a git repo or similar and make things easier. Or just get your platform of choice running on the thing, which seems most reasonable. Maybe something like Esata could work, but thats still retarded.

You could give them more ram DDR4? Not so much anymore. The Parallella platform will be based on free open source development tools and libraries. All board design files will be provided as open source once the Parallella boards are released. When we say open,we mean open datasheets, arch ref manuals, drivers,SDKs, board design files. The only way to get any kind of long term traction is to publish the specs.

Clock skipping would also work.. Slight correction to the title: You can buy the GreenArrays GA today. But you have to program it in Forth. They already have a chip ready and working.

They want money for scaling up manufacturing. Remember the Connection Machine? I wonder what easily available information survives about the architecture, implementation and application software tools. This is also on top of developer experience. The Probelem today is NOT insufficient computing power or not enough cpus. Wired just had a piece about a guy who wants to fly to the edge of space in a balloon. Cobbled together a prototype pressure suit from parts he got on eBay and at Ace.

We use fabs just like Nvidia does so as soon as do full mask set tapouts our per chip pricing is not far off from Nvidia. Still,we are less than th the size of Nvidia so selling our mousetrap is a real challenge. XMOS has been running a monthly design competition for a while now.

The competition is a joke, merely an easy way to win a prize. Will the Parallella will somehow change this? Ask yourself those questions. Adapteva should have asked, and been able to answer those questions, before embarking on this project; and especially before seeking Kickstarter funding.

So what is their answer? So to be brutally honest: Chris, Thanks for the thoughtful comments! Constrains programming model too much. We do feel that the Epiphany would serve as a better experimentation platform and teaching platform for parallel programming.

Halmstad U in Sweden is even playing around with Occam. The future is parallel, and nobody has really figured out the parallel programming model. Without broad parallel programming adoption our architecture will never survive, so we obviously have some self serving interest in trying to provide a platform for people to do parallel programming on.

I also mostly agree with your comments on the alternatives; except the GA, which I have some thoughts on. Most use high-level languages, compiled either to the native language, or to p-code which is often executed by a stack-based virtual machine.

Admittedly not quite as fast as on a machine with a C-optimized instruction set, but having massive parallelism at your disposal could potentially make up for it in practice.

From a business perspective, GreenArrays should have already pursued this. Being strictly an issue of compiler design, this is an possible example of a more direct approach to fulfilling your stated goal, of making parallel computing available to the masses. So to that end, I do wish you luck, success, and ultimately proving myself and other doubters wrong!

Have you seriously looked at the Thimking Machines aka Connection Machine information? A few minutes with google got me these links:. The Connection Machine pdf. Data Parallel Algorithms pdf. I also found online manuals for the Thinking Machines parallelized languages e. Loads of people have figured out the parallel programming model, they just get ignored by people banging on about how hard threads and locks are to use and thus they require more work.

I would direct your attention to CSP — Communicating Sequential Processes, the underlying process calculus behind the Occam language that was used to program the Transputer, a massively parallel architecture in the s. This calculus was developed and PROVEN mathematically by Tony Hoare, and can be used to develop highly parallel programs that can be proven to never deadlock or livelock, and never have race conditions. In fact, because it is SO easy, they often model what many people may write three functions for as three separate processes running in parallel.

Not just people, but research groups and conferences dedicated to the subject. Look what happened with GPUs.

People didnt know how to use it for GPGPU and now every university has a cluster of those, and a ton of software has at least plugins photoshop for example. I can tell you for a fact that the cause and effect here is entirely the opposite to what this project is talking about. I have no idea where they are in terms of producing this thing. Their website only mentions the two 16 and 64 core iterations of the device that are mentioned in the kickstarter.

Even if it were, it could very easily just be a demonstration model without anything inside. The first expense is the soldermasks. These need to be very durable and precise. As far as I know, these are patterned onto quartz glass using e-beam lithography very expensive. These can easily cost upwards of a million dollars, depending on the complexity, for the entire set you need one for each layer, and something like this will definitely have a lot of layers. They could be using something like MOSIS, which brings the cost down by making a grouping together several different chips from other clients.

The obvious problem here is throughput, its designed for prototyping. If they have any real silicon its probably something like that. In terms of actual manufacturer, you basically have two options: FPGA transfer, and cell-based. Cell-based use standard cells and let you lay out each gate.

Moreover, there is the cost of validation and software. Software is self explanatory. If they managed to get enough investments to get the soldermasks supposing they have them then why do are they turning to kickstarter to get funding? Even besides those problems, how are they going to get anyone to make this for them? As others have mentioned, you can get chips with much higher performance, for much less, with the added advantage of not being a first generation adopter.