Alpaca socks bitcoin exchange rates


We're excited to announce that Onename is opening up its API to outside developers, who can now register, update, and look up blockchain-based user identities (also known as blockchain IDs).

Our API allows developers to register and update passcards while keeping ownership of private keys completely client-side. This is a big enabling factor for many types of decentralized applications, and it greatly simplifies things for developers: the API is meant for ease of use and reliability.

The complexity of dealing with the blockchain is hidden from developers: they don't need to run servers or deal with blockchain-level errors, and can simply focus on their application logic. Further, most of the software underlying the API is open source, and developers can play with it themselves. We use this API in our own production system, and a few companies like Stampery and Kryptokit have already started using it. If you're a developer, check it out, and if you plan on using it, we'd love to hear how.

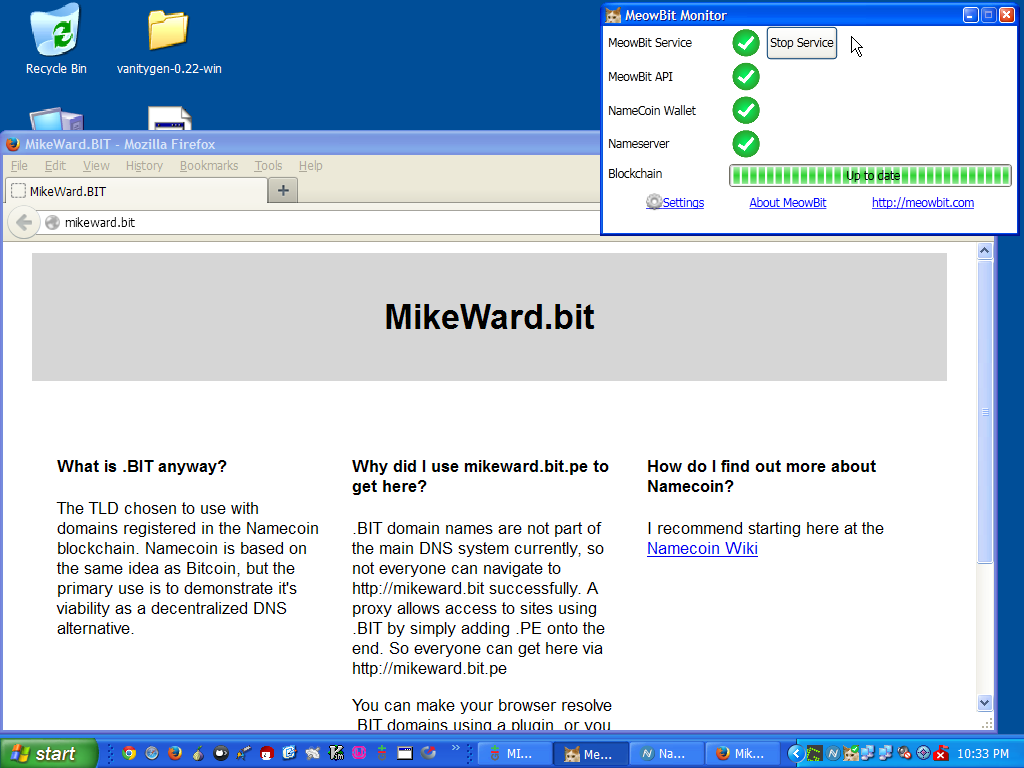

Check out the Onename API.

The Onename API provides a common interface to a couple of separate subsystems that we've built over the past year. Our current production system is built on Namecoin; we've solved several of Namecoin's scalability challenges and extended the basic functionality provided by the underlying blockchain. The Namecoin daemon (namecoind) is a monolithic piece of software in which network operations, database management, wallet functionality, etc. are all handled in the same process.

Further, namecoind currently has poor memory management. Figure a shows read performance for namecoind read calls for 30, passcards (user profiles). We used a local client to avoid wide-area latency in our test runs. Namecoind, MongoDB, and memcached perform comparably to a dumb echo server (namecoind makes extra calls for certain profiles because of the bytes-per-record limit). When namecoind has "enough" memory, read calls are mostly network-bound.

Figure b shows the performance of a single call to fetch the entire namespace data from namecoind-2GB, namecoindGB, and MongoDB respectively. Using namecoind for read calls really shouldn't require this much memory. Memory requirements and reliability (namecoind also crashes a lot) are the two main reasons why, instead of deploying a lot of namecoind nodes, we deploy our custom index of namecoind data (available here).

One surprising use case of blockchain that we discovered is using it for cache invalidation. Our index of Namecoin data needs to stay in sync with the blockchain. Similarly, for building our search index, we get a copy of all passcards and process them. When passcards are registered or updated, we need to refresh our search index. This requires cache invalidation; our search index is made from a cached copy of the namespace and, as the original data changes, our index gets outdated.

Using the blockchain makes search index syncing fairly simple. The above figure shows our architecture for the search API. The Onename API server is open to the Internet and can talk to our private subnet, which runs the namecoind nodes, the search API server(s), and the search index. The blockchain is kept in sync by talking to the Namecoin P2P network, and when new data is detected in blocks, the search API server triggers a search index refresh.
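The refresh-on-new-block idea can be illustrated with a small, self-contained sketch. It is not the Onename implementation: the `Block` and `SearchIndex` classes, the `name_ops` field, and the in-memory `chain` list are hypothetical stand-ins for blocks arriving from the Namecoin network and for the real search index.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical block: a height plus the names registered/updated in it."""
    height: int
    name_ops: dict  # name -> new profile data


@dataclass
class SearchIndex:
    """Hypothetical stand-in for a search index built from cached namespace data."""
    profiles: dict = field(default_factory=dict)
    last_height: int = 0

    def sync(self, chain):
        """Apply name operations from unseen blocks; return the refreshed names.

        Assumes `chain` is ordered by increasing block height.
        """
        refreshed = []
        for block in chain:
            if block.height <= self.last_height:
                continue  # this block was already processed
            for name, profile in block.name_ops.items():
                self.profiles[name] = profile  # replace the stale cached entry
                refreshed.append(name)
            self.last_height = block.height
        return refreshed


chain = [Block(1, {"alice": {"bio": "v1"}}), Block(2, {"bob": {"bio": "v1"}})]
index = SearchIndex()
first_sync = index.sync(chain)   # both names are new to the index

chain.append(Block(3, {"alice": {"bio": "v2"}}))
second_sync = index.sync(chain)  # only the name touched by the new block
```

The point of the sketch is that the block height acts as the invalidation signal: the index never has to poll every record, only the records named in blocks it has not yet seen.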

Explicit cache invalidation is a powerful use case of blockchain and we found it to be very useful.

It only keeps UTXOs (unspent transaction outputs) for addresses in your own wallet in the index. Jameson Lopp (BitGo) has a great writeup on the challenges of blockchain indexing. The task requires going through all transactions in all blocks and saving only the outputs that haven't been spent yet.
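As a rough illustration of that scan, the sketch below walks every transaction in every block and keeps only the outputs that are never spent. The transaction layout (dicts with `txid`, `inputs`, and `outputs`) is a simplified assumption for the example, not the real Bitcoin wire format.

```python
def unspent_outputs(blocks):
    """Return {(txid, vout): (address, value)} for outputs not yet spent.

    Assumed (hypothetical) transaction layout:
      {"txid": str,
       "inputs": [(txid, vout), ...],       # outputs being spent
       "outputs": [(address, value), ...]}  # newly created outputs
    Blocks and transactions are assumed to be in chain order.
    """
    utxos = {}
    for block in blocks:
        for tx in block:
            for outpoint in tx["inputs"]:
                utxos.pop(outpoint, None)  # this output is now spent
            for vout, (address, value) in enumerate(tx["outputs"]):
                utxos[(tx["txid"], vout)] = (address, value)
    return utxos


blocks = [
    [{"txid": "a", "inputs": [], "outputs": [("addr1", 50)]}],
    [{"txid": "b", "inputs": [("a", 0)], "outputs": [("addr2", 30), ("addr1", 20)]}],
]
utxos = unspent_outputs(blocks)
```

Here the output of transaction `a` is spent by `b`, so only the two outputs of `b` remain. The pass is linear in the number of transactions.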

Two observations: this was inherently a linear operation, and (1) decoding of data and (2) the inability to easily find specific UTXOs slowed down processing. We quickly switched to saving decoded data from transactions in an external datastore (MongoDB) and created custom indexes to speed up processing.
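To show why an index helps with finding specific UTXOs, here is a minimal in-memory sketch. It uses a plain Python dictionary keyed by address as a stand-in for a database index; the function names and the data layout are assumptions for illustration, not the MongoDB schema described above.

```python
from collections import defaultdict


def build_address_index(utxos):
    """Group already-decoded UTXOs by address so lookups avoid a full scan.

    `utxos` maps (txid, vout) -> (address, value); this layout is hypothetical.
    """
    index = defaultdict(list)
    for outpoint, (address, value) in utxos.items():
        index[address].append((outpoint, value))
    return index


def balance(index, address):
    """Sum the unspent values for one address using the index."""
    return sum(value for _, value in index.get(address, []))


decoded_utxos = {
    ("b", 0): ("addr2", 30),
    ("b", 1): ("addr1", 20),
    ("c", 0): ("addr1", 5),
}
address_index = build_address_index(decoded_utxos)
addr1_balance = balance(address_index, "addr1")
```

Building the index costs one pass over the decoded data; after that, each per-address lookup touches only that address's entries rather than the whole set.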

The above graph shows the performance improvements from low-hanging fruit like database optimizations: ensuring indexes for frequent queries, using find. Even basic optimizations gave a significant performance improvement, given the large data set. We plan to look at ways to parallelize this operation further and will report back on any interesting findings. Meanwhile, try out our API and feel free to give us feedback!
