The following is a proposed Wikimedia document. References or links to this page should not describe it as supported, adopted, common, or effective.

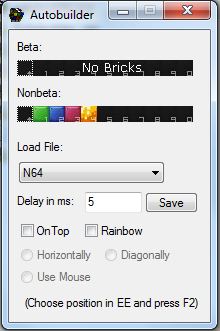

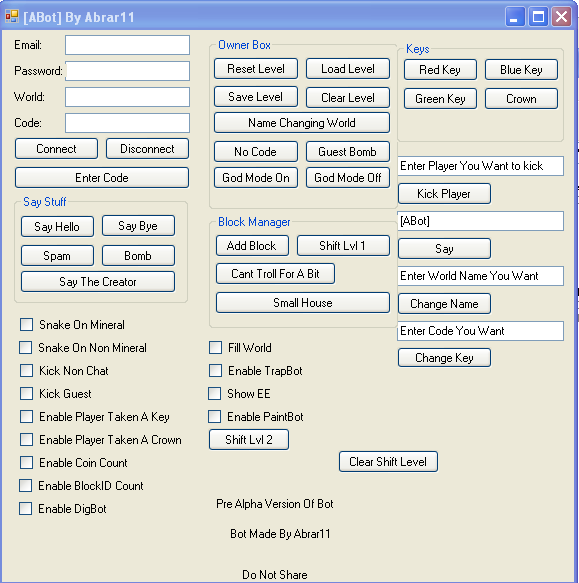

The proposal may still be in development, under discussion, or in the process of gathering consensus for adoption (which is not determined by counting votes).

I would like to make the following proposal for a Policy on overuse of bots in Wikipedias, in light of recent perceived problems caused by overuse of bots. It should reflect that limited use of bots is supported. However, it must define what overuse of bots is, and give solutions to that problem.

Currently, the scope of this proposal only extends to the Wikipedia projects. However, if it is adopted and seems to be successful, it may be used as a model for other Wikimedia projects as well. First I would like to say that bots can be useful tools, and I am not against their use. They can make tedious jobs easier, update information and leverage the work a Wikipedia does.

Pretty much every Wikipedia uses bots to some extent. However, I propose that overuse of bots is harmful to the Wikipedia itself, as well as the wider body of Wikipedias. In the following analysis, I do not mean to pick on the Volapuk and Lombard Wikipedias, but they are the most current obvious examples we have. However, there are probably others out there that fit the model of a Wikipedia that has relied too heavily on bots.

I am defining a "human article" as an article which has been initiated by a human, and a "bot article" as an article initiated by a bot.

Although this may be generalizing a bit, I've chosen this definition for a few reasons. First, it's simple and straightforward, thus it is a parameter which could be easily evaluated. Additionally, I am also trying to avoid a "microedit brigade" scenario. I could see where a bot creates a bunch of articles, and a human or a team of humans makes a small inconsequential edit on each of them to make them "touched by a human". By "microedit" I mean just a little piece of information-- a link, template, category, etc.

It would be difficult to determine if an edit is "inconsequential" or not, so that is a gray area which I would like to avoid. I realize it's not a perfect system. There will be some terrific articles initiated by bots, and some lousy articles initiated by humans.

There will be some articles initiated by humans, and completely overwritten by bots, and some bot articles completely overwritten by humans. However, I would consider most of those scenarios to be outliers. I am speaking about generalities here, and such details are not that important to the end-result. I would suggest that would be too harsh of a limit.

Besides, to limit one Wikipedia's bot use and not others seems to be unfair. I am proposing a 3: If your Wikipedia has articles initiated by humans, you are entitled to additional articles coming from bots. I think even this may be too many bot articles, but at least it is a limit.

And if it seems too lax, we can always change the ratio later. By proposing this ratio, please do not misunderstand. It is not suggested that Wikipedias should have a 3: If you are in a Wikipedia with a small bot-to-human ratio, great!
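To make the arithmetic of such a limit concrete, here is a minimal sketch. It assumes the limit means at most 3 bot-initiated articles for every 1 human-initiated article; the second term of the ratio is cut off in the text above, so that reading is an assumption, and the limit is left as a parameter. The function names are hypothetical and the article counts in the usage note are made up for illustration.

```python
from fractions import Fraction
from math import floor

# ASSUMPTION: the limit is read as 3 bot articles per 1 human article.
# Change this value if the intended ratio is different.
DEFAULT_LIMIT = Fraction(3, 1)


def bot_article_allowance(human_articles: int, limit: Fraction = DEFAULT_LIMIT) -> int:
    """Largest number of bot-initiated articles allowed under the limit."""
    if human_articles < 0:
        raise ValueError("article counts cannot be negative")
    return floor(human_articles * limit)


def excess_bot_articles(human_articles: int, bot_articles: int,
                        limit: Fraction = DEFAULT_LIMIT) -> int:
    """Number of bot-initiated articles above the allowance (0 if compliant)."""
    if bot_articles < 0:
        raise ValueError("article counts cannot be negative")
    return max(0, bot_articles - bot_article_allowance(human_articles, limit))


def is_compliant(human_articles: int, bot_articles: int,
                 limit: Fraction = DEFAULT_LIMIT) -> bool:
    """True when the bot-to-human ratio does not exceed the limit."""
    return excess_bot_articles(human_articles, bot_articles, limit) == 0
```

Under that assumed reading, a Wikipedia with 1,000 human articles would be allowed 3,000 bot articles; with 3,500 bot articles it would be 500 over the limit. Counting by who initiated the article keeps the check simple, which is the reason given above for that definition.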

Remember, a human-created article is almost always superior to the bot-created one. The remedies which have been proposed so far have been closure of the Wikipedia, cutting all of the bot articles, and moving the affected Wikipedia to the Incubator.

All of these remedies seem excessively harsh to me, and seem punitive. Punishment is not the answer, I don't think. Our best bet is to warn of a violation, then allow a reasonable amount of time to self-correct, and only then take outside corrective action. Penalties against a Wikipedia should only be punitive in extreme cases of ignoring the established policy.

Once a Wikipedia is brought back into compliance, it is allowed to undelete articles in the same 3:

How are we to determine which editors are bots? Are all editors that don't have a bot flag, don't have 'bot' in the user name, and don't say on the user page that they are a bot, non-bots?

Or are we going to, for instance, set a limit of edits per day (or hour, week, month) and treat those editors which are making more edits as bots? How should a Wikipedia be warned, and who should do the policing?

Is this a subject that should be brought up here or in the individual Wikipedia, or both? What should we do about Wikipedias that are currently not in compliance? I welcome your comments and suggestions on this difficult issue. However, I have tried to be fair in constructing it. It is not fair to single out one Wikipedia when making this kind of proposal.

I would be curious to know if any other Wikipedias besides the Volapuk violate this proposal as it stands now. I would like to end by saying that I feel no ill-will toward the Volapuk or any other Wikipedia. In fact, I am an admin at the Esperanto Wikipedia, so I have a fondness for conlangs. However, I think the measures that I outline above are necessary to make the Volapuk more robust, and help to protect the image of all Wikipedias.

Additionally, it will help to keep the rampant oneupmanship in control. I ask that you try to keep a level head in the ensuing discussion. Thank you for your attention.

Perhaps we also need to recognize the importance of prose in bot-generated articles. Rambot, the canonical example of an article-generating bot, demonstrated that it is feasible to generate full, readable articles using statistical data. It generated the U. Of course, the rambot articles are far from portable, which is why it's taken a while for those articles to be incorporated into other language editions, and which is why updates to the statistical data in those articles need to be performed with human supervision e.

But I'm sure prose still reflects much better on Wikipedia than any amount of tabular data which you can find at countless other sites.

So perhaps we could adopt Erik Zachte's alternative criteria (column F) when evaluating whether a bot-generated page is a legitimate encyclopedia article:

First of all, a couple of comments about the proposal: And, now, here are the responses:

Before you trigger-happy Volapukists shoot this down without even reading it, did you actually read the part where I asked the question of what to do with VO:WP if this passes?

This proposal is full of places where I say I'm not picking on VO:WP, but simply using them as examples of what I see as bad behavior.

Although I would like to see VO:WP comply with this, it is not my intention to force you to anything by this proposal. I've considered the possibility that VO:WP could even be "grandfathered", in other words:

People, do you realize that if you spent less time arguing, and less time sending out ethically questionable emails campaigning for your cause, and less time arguing every nuance of every clause on every forum, bulletin board, and wiki-discussion page across the entire Web, and less time trying to convince me to change my mind, I'm guessing you would be in compliance in about articles.

How do you like that? What do you think about that? Just give an inch! Until now, I have not seen the Volapuk camp compromise one iota.

I have tried to support you, tried to find a middle ground, tried to respect your position. I get attacks, and double talk, disrespect, but no compromise. By not compromising at all, you show extremism and a lack of maturity.

It would be a big step if you were to make a proposal that showed you were willing to take some steps to address the concerns of the other side.

I do see some problems arising from human-only-maintained articles that get outdated over time when no one cares to update them. I do not see any ethical problems. The motivation of people is nothing we should have to care about as long as it does not lead to wrong or POV articles. My basic perspective is: what suits readers best? Quite often, I find well-written articles by humans superior in quality to bot-created ones, especially mass-created ones presenting only a limited amount of 'canonical' information.

Yet such an article is, usually, much more valuable than no information at all. Otherwise you're pretty much out of luck as I see it. Smaller, and most notably less resourced, language communities are not only in a worse position already from 'normal life' discrimination, limited and expensive internet access, lack of people having a chance to spend their time adding to Wikipedias, etc.

This appears to me contrary to the goals of the WMF and most certainly contrary to the readers' interest. One can believe that missing articles are less inviting to be created than stub articles are to be expanded, if 'seen' by a reader who could contribute, especially inexperienced editors.

I'm uncertain about the ratio, though. Bot-created stubs are unlikely to keep readers entirely from becoming contributors. This may only be happening if bots leave nothing to be desired -- an unlikely situation, and btw. Whether or not people see bot article counts as good or bad is their own taste.

If people want them reflected in statistics, we should include them for clarity, if nothing else.