Lightning Network For Ethereum? ETH Surges as ‘Casper’ Approaches

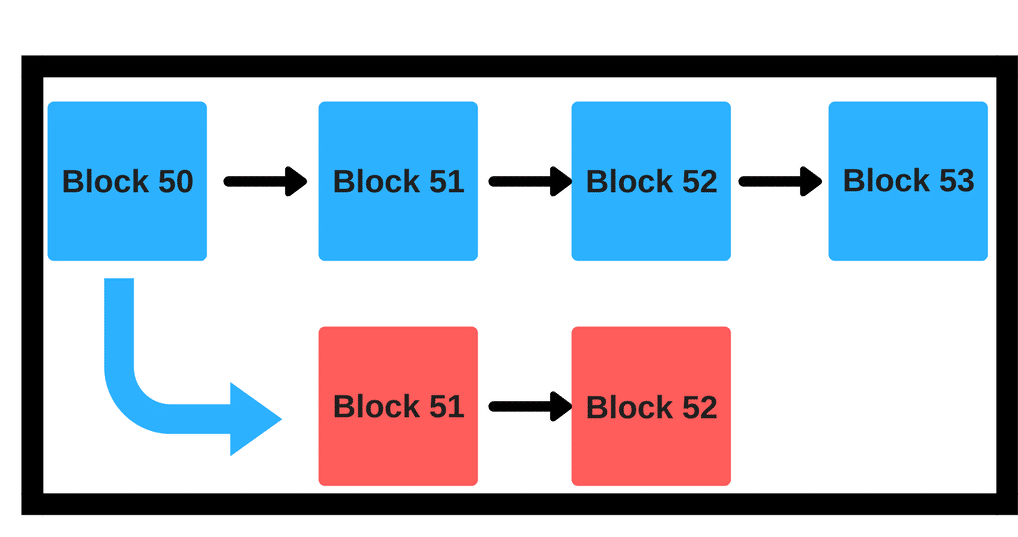

Hybrid Casper FFG uses proof of stake to finalize blocks, but still leaves to proof of work the responsibility of proposing them. To reach full PoS, the task is thus to come up with a proof of stake block proposer. Here is a simple one, and the one that is currently used for the sharding validator manager contract:

This has the benefit of being extremely concise to implement and reason about, but has several flaws:

The first problem is out of scope of this post (see here for an ingenious solution using linkable ring signatures).

To solve the second problem in isolation, the main challenge is developing a random sampling algorithm where each validator has a probability of being selected proportional to their deposit balance.

This can be solved using a binary tree structure, as implemented here. For the third problem, the easiest solution is to set the epoch length to equal the number of active validators, and select a permutation at the start of the epoch. Solving the second and third at the same time, however, is more challenging. There is a conflict between:

Reconciling 1 and 3 requires each validator to have exactly one slot, but this contradicts fairness.
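The binary tree idea mentioned above can be sketched as a sum tree: each leaf holds one validator's deposit, each internal node holds the sum of its children, and a random number below the total is walked down from the root to a leaf. This is only an illustrative sketch, not the linked implementation; the class name `SumTree`, the function `select_validator`, and the use of SHA-256 as the randomness source are assumptions made for the example.

```python
import hashlib


class SumTree:
    """Complete binary tree whose internal nodes store the sum of their children."""

    def __init__(self, balances):
        self.n = len(balances)
        # Round the leaf count up to a power of two so the tree is complete.
        self.size = 1
        while self.size < self.n:
            self.size *= 2
        self.tree = [0] * (2 * self.size)
        for i, balance in enumerate(balances):
            self.tree[self.size + i] = balance
        for i in range(self.size - 1, 0, -1):
            self.tree[i] = self.tree[2 * i] + self.tree[2 * i + 1]

    def total(self):
        return self.tree[1]

    def update(self, index, balance):
        """Set one validator's balance and refresh the sums on the path to the root."""
        i = self.size + index
        self.tree[i] = balance
        i //= 2
        while i >= 1:
            self.tree[i] = self.tree[2 * i] + self.tree[2 * i + 1]
            i //= 2

    def find(self, r):
        """Return the validator index whose balance interval contains r (0 <= r < total)."""
        i = 1
        while i < self.size:
            left = self.tree[2 * i]
            if r < left:
                i = 2 * i          # r falls inside the left subtree
            else:
                r -= left          # skip the left subtree's weight
                i = 2 * i + 1
        return i - self.size


def select_validator(tree, seed: bytes) -> int:
    """Map a seed to a validator with probability proportional to its balance.

    ASSUMPTION: SHA-256 stands in for whatever randomness the chain provides.
    The modulo introduces a negligible bias for realistic totals.
    """
    r = int.from_bytes(hashlib.sha256(seed).digest(), "big") % tree.total()
    return tree.find(r)
```

Both selection and a balance update touch one root-to-leaf path, so each costs O(log n) in the number of validators.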

One can combine 1 and 2 with a hybrid algorithm; for example, one can imagine an algorithm that normally just randomly selects validators, but if it notices that there are too many not-yet-selected validators compared to the number of blocks left in the period, it continues looking until it finds a not-yet-selected validator. One can achieve an even better result with an exponential backoff scheme:

Achieving finality requires two messages from every validator that must be processed by the blockchain, so the rest follows trivially.
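A minimal sketch of the hybrid algorithm described above, under stated assumptions: the function name `hybrid_schedule` is hypothetical, Python's seeded `random.Random` stands in for an on-chain randomness source, all deposits are assumed positive, and "too many" is read as "at least as many not-yet-selected validators as slots left".

```python
import random


def hybrid_schedule(deposits, period, seed=0):
    """Return a list of `period` proposer indices.

    Each slot draws a validator with probability proportional to deposit.
    Once the number of not-yet-selected validators reaches the number of
    slots left, draws are repeated until one lands on a not-yet-selected
    validator, so every validator gets at least one slot per period.
    """
    n = len(deposits)
    if period < n:
        raise ValueError("period must be at least the number of validators")
    rng = random.Random(seed)  # ASSUMPTION: stand-in for public entropy
    indices = list(range(n))
    unselected = set(indices)
    schedule = []
    for slot in range(period):
        slots_left = period - slot
        while True:
            pick = rng.choices(indices, weights=deposits, k=1)[0]
            # Accept freely while there is slack; otherwise insist on a new validator.
            if len(unselected) < slots_left or pick in unselected:
                break
        unselected.discard(pick)
        schedule.append(pick)
    return schedule
```

The forced draws skew the distribution away from strict proportionality near the end of a period, which is the fairness cost the text refers to.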

There are two ends of the tradeoff that are obvious:

I believe that the optimal solution lies in the middle. Casper FFG already does this, supporting:

But with full proof of stake, there is a binary divide: One could simply accept the redundancy, keeping Casper FFG as it works today and adding in a PoS block proposer, but we may be able to do better.

I believe that the ring signature scheme achieves this. The sampling is a permutation without repetitions. Regarding deposit sizes, my latest (not too strongly held) opinion is that fixed-size deposits beat variable-size deposits:

So all in all I think the ring signature scheme has the right properties:

Total number of validators:

This period of 4 is only a little bit more than the number of validators (3), so the logarithmic function is very efficient here and keeps the full period from becoming too long.

If you take a period of 6 or 7, then there is a high probability that the vast majority of validators will create at least one block. But on average they will. Concerning the other topic, I agree with a balance between a medium number of nodes, a medium time to finality and a medium amount of overhead.

I think the first implementation of POS should be as simple as possible to see how the network will react: It would be like in bitcoin validation, when you see the number of confirmations growing over time.

With POS, it would just be much faster. The problem with fixed size deposits is simple efficiency: To remove any time-based correlation across logs, we publicly shuffle the array using public entropy.

To achieve this over the long run, you could keep track of chance balances per validator. At the beginning of each epoch of N blocks you add the deposit amount of the validator to the chance balance. You draw the validators relative to the size in the chance balance.

If a validator's chance balance is negative, this validator cannot be chosen and has to wait for it to turn positive again. If after an epoch a validator's chance balance is still positive, this balance is carried over to the next epoch to increase its chances. This of course depends on the distribution of the deposits. The more evenly distributed, the more blocks we need. Computation is done off chain.
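The chance-balance idea above can be sketched as follows. The description does not say how much a validator's chance balance drops when it is chosen, so the sketch assumes one slot costs the total deposit divided by the epoch length, which makes credits and debits cancel over an epoch. The function name `run_epoch` and the seeded `random.Random` are likewise assumptions for illustration.

```python
import random


def run_epoch(deposits, chance, epoch_length, rng):
    """Run one epoch, updating `chance` in place; return the chosen validator indices.

    Balances are kept in units of deposit * epoch_length so everything stays an integer.
    """
    total = sum(deposits)
    # ASSUMPTION (not stated in the text): one slot costs total / epoch_length
    # deposit units, which equals `total` in the scaled units used here.
    slot_cost = total
    for i, deposit in enumerate(deposits):
        chance[i] += deposit * epoch_length  # epoch credit, scaled
    chosen = []
    for _ in range(epoch_length):
        # Validators with a non-positive chance balance get weight 0 and must wait.
        weights = [max(c, 0) for c in chance]
        pick = rng.choices(range(len(chance)), weights=weights, k=1)[0]
        chance[pick] -= slot_cost
        chosen.append(pick)
    return chosen
```

Under this assumption a validator is only debited while its balance is positive, so its balance never falls to minus one slot cost or below, and its long-run slot count stays within a small constant of its proportional share.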

The next validator posts proof along with his vote - array index. Btw, 64 bytes for address: With balance precision reduced by 2 bits 32 bytes would be enough for address 20 bytes: Then when a proposal is made, augment the signature by the ephemeral identity with a zk-proof for the deposit amount.

In a scheme with perfect fairness, notice that private lookbehind is necessary for private lookahead. The above scheme achieves a nice efficiency gain, and only slight compromises on private lookbehind:

You could store sumOfPreviousBalances in a fancier data structure, but that makes everything O(log n) and is basically my solution. This is subtracting approximations from each other in a way that can easily lead to destructive error amplification, so I would not trust the specific number too much though.

A positive thing about these algorithms is that a single bad node cannot slow down the system since there is no master and no timeout. Because of this, large clusters are not really practical. It aims to overcome the scaling problem by running multiple instances of consensus on each subgroup of nodes.

As an example, if you have a cluster of 256 nodes, you split it into 16 groups of 16 nodes, and then run 16 instances of asynchronous consensus in parallel, one consensus instance for each group.

Then you get 16 small blocks, which you provide as inputs for higher-level consensus. You then run a high-level consensus instance to order these blocks and create one large block.

In this way, we believe we can get to fast finality even for larger chains, as the finality time will be essentially proportional to a logarithm of the chain size. In addition, consensus becomes truly parallel, with different subgroups of nodes working on different sub-tasks at the same time.

I actually dislike these for cryptoeconomic reasons: When there are N parties sharing the responsibility in some way where each one only has small influence over the outcome, then it gets weirder.

I agree that fully asynchronous protocols may be a bit more difficult to debug and if something goes wrong attribution of wrongdoing is not easy … on the other hand it is harder for things to go wrong in fully asynchronous protocols, since failure of a small portion of nodes does not affect things much …

If you like PBFT, could you still do parallel processing by running multiple PBFT instances to create small blocks, and feed the resulting small blocks into a higher level PBFT instance to order them into the final block?

R is a random number between 0 and the sum of all stake, based on the previous block(s); the next validator is the one whose summed balance contains R. We know simplicity matters. What specific practical problems would it cause?

Initial explorations on full PoS proposal mechanisms (Casper).
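The rule quoted above, where R is drawn below the total stake and the next validator is the one whose running sum of balances contains R, can be sketched with prefix sums and a binary search. The function names `validator_for` and `next_validator` are hypothetical, and SHA-256 of the previous block hash is an assumed stand-in for however R is actually derived.

```python
import bisect
import hashlib
from itertools import accumulate


def validator_for(balances, r):
    """Return the index i such that r lies in validator i's slice of the total stake."""
    sums = list(accumulate(balances))  # sums[i] = balances[0] + ... + balances[i]
    if not 0 <= r < sums[-1]:
        raise ValueError("r must satisfy 0 <= r < total stake")
    # First index whose running sum is strictly greater than r.
    return bisect.bisect_right(sums, r)


def next_validator(balances, prev_block_hash: bytes) -> int:
    """Derive R from the previous block hash (ASSUMPTION) and look up the validator."""
    digest = hashlib.sha256(prev_block_hash).digest()
    r = int.from_bytes(digest, "big") % sum(balances)
    return validator_for(balances, r)
```

Rebuilding the prefix sums is linear in the number of validators; a tree of partial sums would make both lookups and balance changes logarithmic instead.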

Here is a simple one, and the one that is currently used for the sharding validator manager contract: All validators have the same deposit size. Suppose there are N active validators.

Currently, I favor this latter scheme. There are two other possibilities: With sharding, Casper votes can also serve double-duty as collation headers.

When a block is created, a random set of N validators is selected that must validate that block for it to be possible to build another block on top of that block. At least M of those N must sign off. This is basically proof of activity except with both layers being proof of stake; it brings the practical benefit that a single block gives a very solid degree of confirmation - arguably the next best thing to finality - without adding much complexity.
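As a rough illustration of the committee rule above, the sketch below picks N validators from a seed derived from the block and checks whether enough of them have signed. Everything concrete here is an assumption: the function names, the use of SHA-256 and Python's `random.Random` for sampling, and the representation of signatures as a plain collection of validator ids with no cryptographic verification.

```python
import hashlib
import random


def select_committee(validators, block_hash: bytes, n: int):
    """Pick n distinct validators, deterministically from the block hash."""
    seed = int.from_bytes(hashlib.sha256(block_hash).digest(), "big")
    return random.Random(seed).sample(validators, n)


def can_build_on(committee, signers, m: int) -> bool:
    """True if at least m committee members are among the signers.

    Signatures from validators outside the committee are ignored.
    """
    return len(set(signers) & set(committee)) >= m
```

For example, with a committee of 10 and a threshold of 7, a child block would only be accepted once 7 distinct committee members have signed the parent.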

This could be seen as bringing together the best of both worlds between committee algorithms and full-validator-set algorithms. Currently, I favor 2. Regarding deposit sizes, my latest (not too strongly held) opinion is that fixed-size deposits beat variable-size deposits: multiple fixed-size validators can ultimately be controlled by the same entity.

So from the point of view of the freedom to choose the deposit amount, fixed-size deposits allow for variable-size deposits, just with less granularity. For large deposits the added value of greater granularity dies off quickly, and for small deposits the added value is limited by the minimum deposit size.

By breaking down large validators into smaller equally sized sub-validators we can increase decentralisation and improve incentive alignment. The reason is we can use cryptographic techniques to force a fixed amount of non-outsourceable non-reusable work per identity.

For example, we may be able to enforce proof-of-storage per identity, or maybe proof-of-work per identity using a proof of sequential work, or maybe proof of custody can help with outsourceability.

I believe the dfinity project is partly taking the fixed-size deposit approach for this reason. One could try to argue that variable-size deposits are good because they allow for batching and pooling, reducing node count, hence reducing overheads at the consensus layer.

Hello, I agree with the concept. For solving the second and third points, a logarithmic function would be useful: The last topic is rather an optimization and could be implemented later. Ah, I see, you mention: Selecting can be made O(1) for variable deposits. The above scheme achieves a nice efficiency gain, and only slight compromises on private lookbehind: Cool. Out of curiosity, could you share the math?

What constant did you get? It is a research project at the moment, I will post more on this as we test the idea …