
See also the lab introduction slides. In this assignment, you will learn how to do basic real-time programming on an embedded device with a runtime that supports real-time tasking. The device runs an Ada runtime system based on the Ravenscar Small Footprint profile.

Solve this assignment in your groups. The lab should be done in groups of 3 people, or in exceptional cases in groups of 2 people. Submissions by a single student will normally not be accepted. All students participating in the group shall be able to describe all parts of the solution. The box includes all the necessary parts for solving the assignment, and the group is responsible for handing the package back at the end of the course.

Solutions have to be submitted via the student portal. The deadline for submission is September 26th. No submissions will be accepted after this point. Hand-ins with non-indented code will be discarded without further consideration. The last part of this assignment consists of building a robot car that can follow a car on a track (see below).

All groups have to show that their car is able to complete the tour. Please sign up for the demonstration times on this Doodle poll.

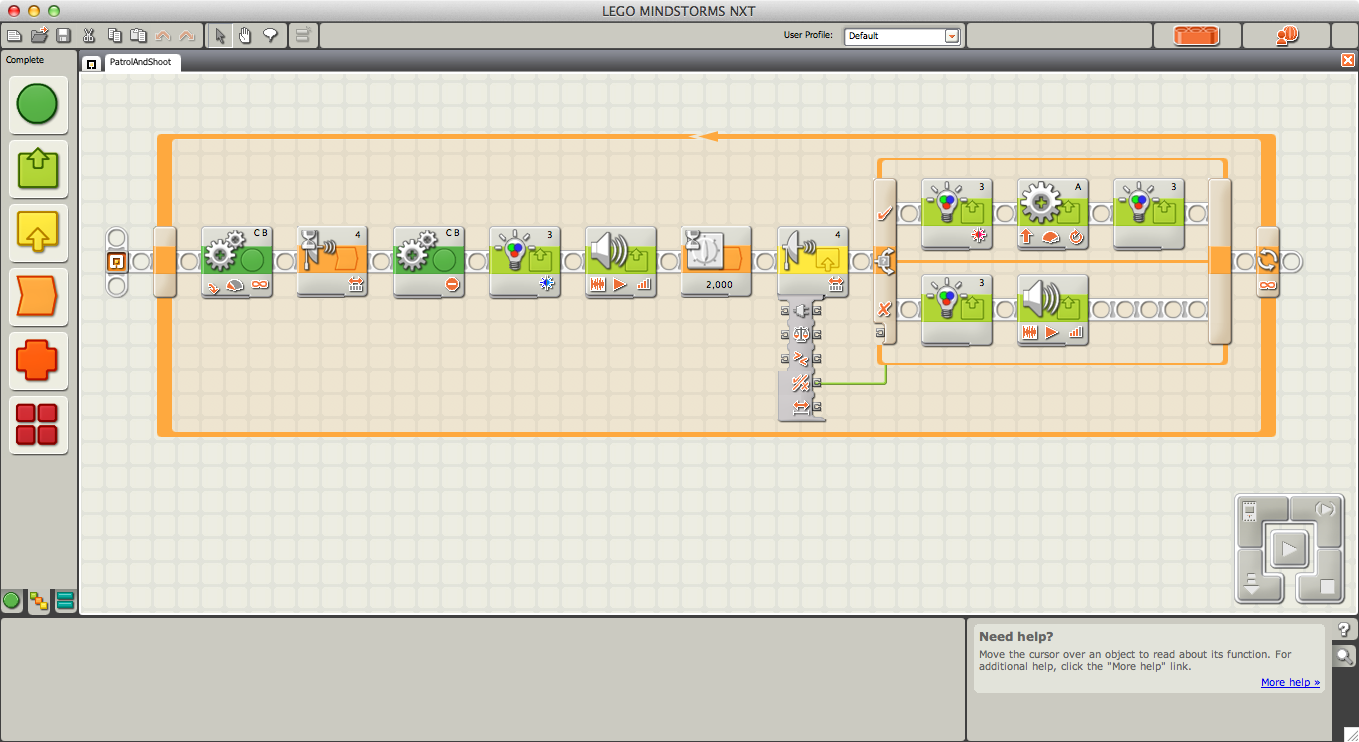

The NXT brick can be used to control actuators, like an integrated sound generator, lights, and motors, and to read input from various sensors, like light sensors, pressure sensors, rotation sensors, and distance sensors. The Ravenscar Small Footprint Profile (SFP) supports a subset of the original Ada language that is suitable for predictable execution of real-time tasks in memory-constrained embedded systems.

The Ada runtime system it uses is very small. In this lab, we will not use a real-time operating system, only a runtime system supporting Ada tasking and scheduling features. Ada programs will run in RAM, so after turning off the robot the program will be gone and you need to upload it again for the next run. Unfortunately, there is no proper API documentation for this driver library.

The way to learn programming with these drivers is to check the driver specifications in their respective specification files. You can find some packages of drivers and example code as part of the getting-started section below. The compilation toolchain first compiles the Ada file into an ARM binary and then generates the whole system's binary by merging the driver binaries with it. This includes definitions of all tasks, resources, event objects, etc.

All software necessary to work with Ada and the NXT platform is installed on the Windows lab machines in the lab. This includes software for flashing the firmware, compiling programs, and uploading them.

Cygwin provides a shell that emulates a Unix environment inside Windows. In order to compile Ada NXT programs, all you need is an appropriate makefile in the current directory.

It is recommended that you use a different subdirectory for each part of the assignment. To compile, use the "make all" command. The compiler will compile all the required drivers and at the end generate a compressed file with the same name as the main procedure (with no extension on Windows).

In order to start with the lab, you first need to change a setting in the original firmware of the robot. Now put it into reset mode by pressing the reset button at the back of the NXT (upper left corner, beneath the USB connector) for more than 5 seconds. The brick will start ticking shortly after.

This means your robot is ready for uploading the code into its RAM. In this lab, the robot will always be in "reset mode" when you upload a program, as the code of the previous run cannot reside in RAM after the robot is turned off. The original firmware can be flashed back with the help of the TA; please do this before handing back the box. A successful upload will show something similar to the following (the address may be different): Image started at 0xc.

Now you can disconnect the robot and try testing it. You can turn off the robot by pressing the middle orange button. If you would like to work at home, you can install the compilation and upload toolchain yourself. Since this depends heavily on your setup, we can't give you any direct support. However, installation on Windows is fairly simple, and instructions for Windows and Linux installation can be found in the instruction file. The program you will write is a simple "hello world!" program.

Note that the main procedure is assigned the lowest priority, Priority'First, by using the attribute 'First, which denotes the first value of a range. The main procedure then calls the procedure Background, which is the main procedure of package Tasks.
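The listing that this paragraph describes is not reproduced here. As a rough sketch only, such a main procedure might look like the following. The names Hello_World and the use of pragma Priority with System.Priority'First are assumptions; only Priority'First, Tasks, and Background are named in the text. In the lab, Tasks would be a separate library-level package pulled in with a with clause; it is replaced by a nested stand-in that prints to the console so the sketch compiles on its own on a host machine.

```ada
with System;
with Ada.Text_IO;

procedure Hello_World is
   --  Lowest priority: every task of the application can preempt
   --  the environment task that runs this procedure.
   pragma Priority (System.Priority'First);

   --  Stand-in for the lab's package Tasks (assumption: it exports a
   --  parameterless procedure Background).
   package Tasks is
      procedure Background;
   end Tasks;

   package body Tasks is
      procedure Background is
      begin
         --  On the NXT this would write to the display instead.
         Ada.Text_IO.Put_Line ("hello world!");
      end Background;
   end Tasks;
begin
   Tasks.Background;
end Hello_World;
```

Giving the main procedure the lowest priority means it never delays any of the real-time tasks declared in the application.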

Your code should do the following: Light sensors are a bit tricky to initialize. Try different procedures of the nxt-display package to master output on the display. For more advanced kinds of display output, you can use the nxt-display-concurrent package from facilities. Make sure your code compiles without errors and executes as desired on the NXT brick.

Try to measure light values of different surfaces (light ones, dark ones, ...).

In this part, you will learn how to program event-driven schedules with the NXT. The target application will be a LEGO car that drives forward as long as you press a touch sensor and it senses a table underneath its wheels with the help of a light sensor.

For this purpose, build a LEGO car that can drive on wheels. You may find inspiration in the manual included in the LEGO box. Further, connect a touch sensor using a standard sensor cable to one of the sensor inputs. Ideally, events generated by external sources are detected by interrupt service routines (ISRs). This allows the system to react immediately to signals from various sources. Unfortunately, most of the sensors on the NXT work in polling mode: they need to be asked for their state again and again, instead of becoming active themselves when something interesting happens.

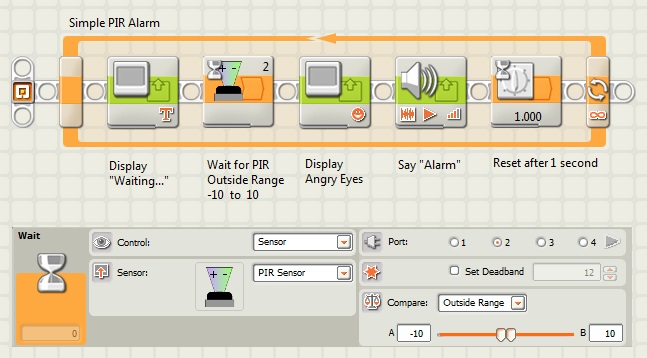

Our workaround for this is to create a small second task that checks the sensors periodically (about every 10 ms). If the state of a sensor has changed, it generates the appropriate event for us.
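The polling idea can be sketched as follows. This is a minimal host-side illustration, not the lab's driver code: Read_Touch is a hypothetical stand-in for the real sensor call, and the loop is bounded so that the program terminates, whereas the task on the NXT would loop forever.

```ada
with Ada.Real_Time; use Ada.Real_Time;
with Ada.Text_IO;

procedure Polling_Sketch is
   Period  : constant Time_Span := Milliseconds (10);
   Next    : Time    := Clock;
   Old     : Boolean := False;
   Current : Boolean;

   --  Hypothetical stand-in for the driver call that reads the touch
   --  sensor: the sensor counts as pressed during steps 20 .. 59.
   function Read_Touch (Step : Natural) return Boolean is
   begin
      return Step in 20 .. 59;
   end Read_Touch;
begin
   for Step in 0 .. 99 loop
      Current := Read_Touch (Step);

      --  Only a change of state counts as an event.
      if Current /= Old then
         if Current then
            Ada.Text_IO.Put_Line ("touch pressed");
         else
            Ada.Text_IO.Put_Line ("touch released");
         end if;
         Old := Current;
      end if;

      --  Absolute release times avoid accumulating drift.
      Next := Next + Period;
      delay until Next;
   end loop;
end Polling_Sketch;
```

The sketch uses delay until with an absolute time rather than a relative delay, which is the form the Ravenscar profile permits.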

The protected object Event holds the event data (an Integer) and a Signalled flag. This protected object can be used by different tasks to communicate with each other. For example, a task can block while waiting to receive an event. In order to do this, declare and implement a task "EventdispatcherTask". It should call the appropriate API function to read the touch sensor and compare the result to its old state.
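Since the declaration of the protected object is not shown in full, here is one way it might be written. This is a sketch under assumptions: the entry name Wait, the parameter names, and the event id Touch_On_Event are hypothetical; only the Integer event data, the Signalled flag, and a signal procedure are mentioned in the text.

```ada
with Ada.Text_IO;

procedure Event_Sketch is
   Touch_On_Event : constant Integer := 1;  --  hypothetical event id

   protected Event is
      --  Blocks the caller until an event has been signalled.
      entry Wait (Event_Id : out Integer);
      --  Stores the event and opens the barrier of Wait.
      procedure Signal (Event_Id : in Integer);
   private
      Current_Event_Id : Integer := 0;      --  event data
      Signalled        : Boolean := False;  --  barrier of Wait
   end Event;

   protected body Event is
      entry Wait (Event_Id : out Integer) when Signalled is
      begin
         Event_Id  := Current_Event_Id;
         Signalled := False;
      end Wait;

      procedure Signal (Event_Id : in Integer) is
      begin
         Current_Event_Id := Event_Id;
         Signalled        := True;
      end Signal;
   end Event;

   Received : Integer;
begin
   Event.Signal (Touch_On_Event);
   Event.Wait (Received);  --  barrier is open, so this returns at once
   Ada.Text_IO.Put_Line ("received event" & Integer'Image (Received));
end Event_Sketch;
```

A single entry guarded by a plain Boolean variable keeps the object within what the Ravenscar profile allows. Note that a second Signal before the waiting task has run overwrites the stored event.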

A static variable may be useful for that. If the state has changed, the task should release the corresponding event by using the Signal procedure of the Event protected object. Just as in part 1, put your code in an infinite loop with a delay at the end of the loop body. As suggested by the names of the events, the idea is that they should occur as soon as the user presses and releases the attached touch sensor.

In order for MotorcontrolTask to have priority over EventdispatcherTask, make sure to assign a lower priority to the latter. Otherwise, the infinite loop containing the sensor reading would just make the system completely busy and it could never react to the generated events.
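The interplay of the two tasks and their priorities might be sketched like this. Everything beyond the task names and the Event object is an assumption: the event ids, the Wait entry, the Touch_Pressed stand-in for the sensor driver, and the concrete priority values are hypothetical. On the NXT, the tasks would live in a library-level package and loop forever; here they are nested in one procedure with bounded loops so the sketch terminates on a host.

```ada
with System;
with Ada.Real_Time; use Ada.Real_Time;
with Ada.Text_IO;

procedure Priority_Sketch is
   Touch_On_Event  : constant Integer := 1;  --  hypothetical ids
   Touch_Off_Event : constant Integer := 2;

   protected Event is
      entry Wait (Event_Id : out Integer);
      procedure Signal (Event_Id : in Integer);
   private
      Current_Event_Id : Integer := 0;
      Signalled        : Boolean := False;
   end Event;

   task EventdispatcherTask is
      pragma Priority (System.Priority'First + 1);  --  lower priority
   end EventdispatcherTask;

   task MotorcontrolTask is
      pragma Priority (System.Priority'First + 2);  --  higher priority
   end MotorcontrolTask;

   --  Hypothetical sensor stand-in: pressed during steps 20 .. 59.
   function Touch_Pressed (Step : Natural) return Boolean is
   begin
      return Step in 20 .. 59;
   end Touch_Pressed;

   protected body Event is
      entry Wait (Event_Id : out Integer) when Signalled is
      begin
         Event_Id  := Current_Event_Id;
         Signalled := False;
      end Wait;

      procedure Signal (Event_Id : in Integer) is
      begin
         Current_Event_Id := Event_Id;
         Signalled        := True;
      end Signal;
   end Event;

   task body EventdispatcherTask is
      Period  : constant Time_Span := Milliseconds (10);
      Next    : Time    := Clock;
      Old     : Boolean := False;
      Current : Boolean;
   begin
      for Step in 0 .. 99 loop
         Current := Touch_Pressed (Step);
         if Current /= Old then
            if Current then
               Event.Signal (Touch_On_Event);
            else
               Event.Signal (Touch_Off_Event);
            end if;
            Old := Current;
         end if;
         Next := Next + Period;
         delay until Next;  --  lets lower-priority work run in between
      end loop;
   end EventdispatcherTask;

   task body MotorcontrolTask is
      Id : Integer;
   begin
      for I in 1 .. 2 loop  --  this sketch produces exactly two events
         Event.Wait (Id);   --  blocked until the dispatcher signals
         if Id = Touch_On_Event then
            Ada.Text_IO.Put_Line ("motor on");
         else
            Ada.Text_IO.Put_Line ("motor off");
         end if;
      end loop;
   end MotorcontrolTask;
begin
   null;  --  the procedure only waits for its tasks to finish
end Priority_Sketch;
```

Because MotorcontrolTask spends almost all of its time blocked in Wait, giving it the higher priority costs nothing and lets it react as soon as an event is signalled.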

Further, add some nice status output on the LCD. This should complete your basic event-driven program. Compile and upload the program and check whether the car reacts to your commands. Attach a light sensor to your car, mounted somewhere in front of the wheel axis, close to the ground, and pointing downwards.

Extend the program to also react to this light sensor. The car should stop not only when the touch sensor is released, but also when the light sensor detects that the car is very close to the edge of a table.

You may need to play a little bit with the "Hello World!" program from part 1. The car should only start moving again when the car is back on the table and the touch sensor is pressed again. The edge detection should happen in EventdispatcherTask and be communicated to MotorcontrolTask via the Event protected object. Use two new events for that purpose.
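One possible reading of this requirement is that the motors run exactly when the touch sensor is pressed and the light sensor sees the table. The sketch below shows only that decision logic, as it might appear inside MotorcontrolTask. All four event names and the assumption that the car starts on the table are hypothetical, and the printed commands stand in for the real motor calls.

```ada
with Ada.Text_IO;

procedure Edge_Sketch is
   --  Hypothetical event ids; the assignment's own names may differ.
   Touch_On_Event  : constant Integer := 1;
   Touch_Off_Event : constant Integer := 2;
   Table_On_Event  : constant Integer := 3;  --  light sensor sees the table
   Table_Off_Event : constant Integer := 4;  --  light sensor sees the edge

   Pressed  : Boolean := False;
   On_Table : Boolean := True;  --  assumed: the car starts on the table

   --  Update the state for one event and print the resulting command.
   procedure Handle (Id : in Integer) is
   begin
      case Id is
         when Touch_On_Event  => Pressed  := True;
         when Touch_Off_Event => Pressed  := False;
         when Table_On_Event  => On_Table := True;
         when Table_Off_Event => On_Table := False;
         when others          => null;
      end case;

      if Pressed and On_Table then
         Ada.Text_IO.Put_Line ("drive");
      else
         Ada.Text_IO.Put_Line ("stop");
      end if;
   end Handle;
begin
   Handle (Touch_On_Event);   --  prints "drive"
   Handle (Table_Off_Event);  --  prints "stop"
   Handle (Table_On_Event);   --  prints "drive" (sensor still pressed)
   Handle (Touch_Off_Event);  --  prints "stop"
end Edge_Sketch;
```

If a fresh press should be required after the car has left the table, the handler would additionally have to clear Pressed when the edge event arrives.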

Make sure you define and use all events properly. Further, the display should provide some useful information about the state of the car. Please hand in only the source of the full second program that includes the light sensor code.

Make sure you include brief explanations and that your source is well-commented. Note that hand-ins without meaningful comments will be directly discarded.

Real-time schedulers usually schedule most of their tasks periodically. This usually fits the applications: sensor data needs to be read periodically, and reactions in control loops are also calculated periodically and depend on a constant sampling period.

Another advantage over purely event-driven scheduling is that the system becomes much more predictable, since load bursts are avoided and very sophisticated techniques exist to analyze periodic schedules. You will learn about response-time analysis later during the course.

The target application in this part will make your car keep a constant distance to some given object in front of it. Additionally, the touch sensor is used to tell the car to move backwards a little bit in order to approach the object again. Note that this is a new program again, so for now, do not just extend the program from the event-driven assignment part.