Forex trading robot 2013 free download


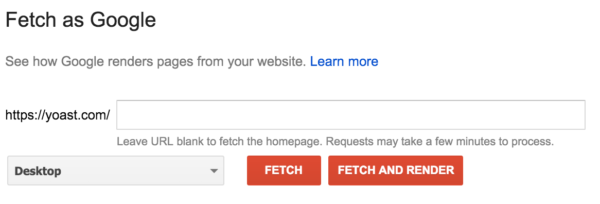

Do you want to optimize your WordPress robots.txt file? Not sure why and how a robots.txt file matters for your SEO? We have got you covered. In this article, we will show you how to optimize your WordPress robots.txt file.

Recently, a user asked us if they need a robots.txt file. It basically allows you to communicate with search engines and let them know which parts of your site they should index. The absence of a robots.txt file will not stop search engines from crawling and indexing your website. However, we highly recommend that you create one if you do not already have it.

You will need to connect to your site using an FTP client or the cPanel file manager to view your robots.txt file, which normally sits in your site's root folder. It is just like any ordinary text file, and you can open it with a plain text editor like Notepad. If you do not have a robots.txt file, you can create one. All you need to do is create a new text file on your computer, save it as robots.txt, and upload it to your site's root folder.

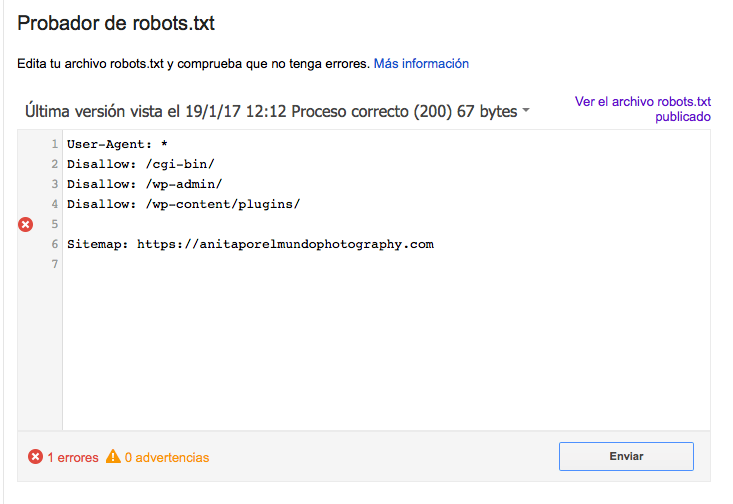

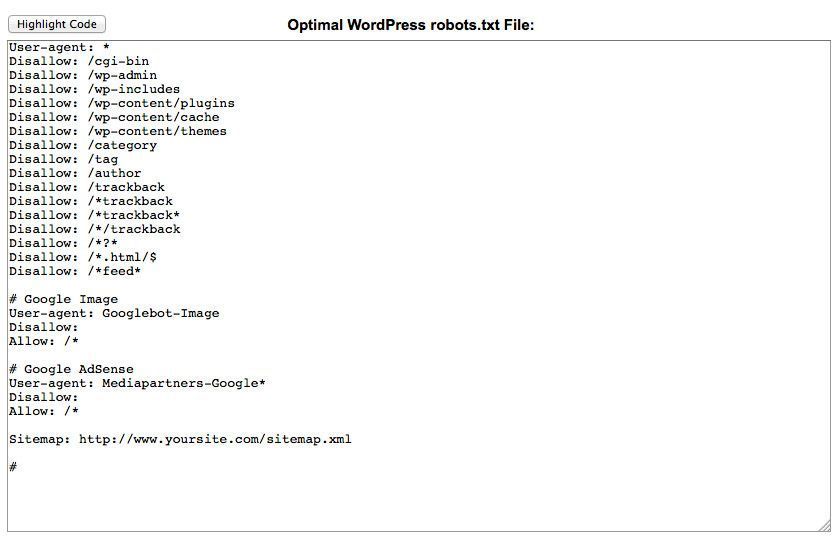

The format of a robots.txt file is simple. The first line usually names a user agent. The user agent is the name of the search bot you are trying to communicate with, for example Googlebot or Bingbot.
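
To make the format concrete, here is a minimal Python sketch that groups the directives in a robots.txt string under the user agent they follow. The function name `group_rules` and the paths `/private/` and `/drafts/` are made up for illustration, and the parsing is deliberately simplified compared with what a real crawler does.

```python
# Hypothetical sample: the paths below are illustrative, not from the article.
SAMPLE_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: Bingbot
Disallow: /drafts/
"""


def group_rules(robots_txt):
    """Return {user_agent: [(directive, value), ...]} for a robots.txt string."""
    groups = {}
    agents = []           # user agents the current block applies to
    in_agent_run = False  # True while reading consecutive User-agent lines
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue  # blank or malformed line
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if not in_agent_run:
                agents = []  # a new block starts here
            agents.append(value)
            groups.setdefault(value, [])
            in_agent_run = True
        else:
            in_agent_run = False
            for agent in agents:
                groups[agent].append((field, value))
    return groups


if __name__ == "__main__":
    for agent, rules in group_rules(SAMPLE_ROBOTS_TXT).items():
        print(agent, rules)
```

Each block starts with one or more `User-agent` lines, and every directive that follows belongs to those agents until the next block begins.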

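In the sample robots.txt file, the first line addresses the user agent. In the next two lines we have disallowed bots from indexing our WordPress plugins directory and the readme.html file. In its guidelines for webmasters, Google advises against relying on robots.txt to keep pages out of its index. If you were thinking about using robots.txt to keep your archive pages out of search results, that may not be a wise choice.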
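
The sample file itself is not reproduced here, so the sketch below uses a hypothetical stand-in that matches the description (a plugins directory rule and a readme rule) and checks it with Python's standard `urllib.robotparser`. The domain `example.com`, the helper name `is_allowed`, and the exact rule paths are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical stand-in for the sample file; not the article's original.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-content/plugins/
Disallow: /readme.html
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the rules in robots_txt permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    base = "https://example.com"  # placeholder domain
    for path in ("/", "/readme.html", "/wp-content/plugins/example/style.css"):
        print(path, is_allowed(SAMPLE_ROBOTS_TXT, "Googlebot", base + path))
```

Note that a rule on the plugins directory also covers any CSS or JavaScript files that plugins serve from it.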

Remember, the purpose of robots.txt is to give bots instructions about your site. It is advisory and does not stop bots from crawling your website. There are other WordPress plugins which allow you to add meta tags like nofollow and noindex to your archive pages. The WordPress SEO plugin also allows you to do this. You do not need to add your WordPress login page, admin directory, or registration page to robots.txt.
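
As a rough illustration of the meta tag approach, the following Python sketch reads the robots meta tag from a page's HTML and reports its directives. The class and function names and the sample page are hypothetical, and a real audit would fetch the live page rather than use a hard-coded string.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        for part in content.split(","):
            part = part.strip().lower()
            if part:
                self.directives.add(part)


def robots_directives(html):
    """Return the set of robots meta directives present in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical archive page carrying a noindex directive.
SAMPLE_PAGE = (
    '<html><head><meta name="robots" content="noindex, follow"></head>'
    "<body>Archive</body></html>"
)

if __name__ == "__main__":
    print(sorted(robots_directives(SAMPLE_PAGE)))
```

A page that reports `noindex` here is asking search engines not to list it, which is a separate mechanism from the crawl rules in robots.txt.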

It is recommended that you disallow the readme.html file in your robots.txt file. This readme file can be used by someone who is trying to figure out which version of WordPress you are using. If this were an individual, they could easily access the file by simply browsing to it. On the other hand, if someone is running a malicious query to locate WordPress sites using a specific version, then this Disallow rule can protect you from those mass attacks.

You can also disallow your WordPress plugin directory.

If your XML sitemap plugin fails to add its sitemap lines to robots.txt automatically, it will show you the link to your XML sitemaps, which you can add to your robots.txt file manually.

Honestly, many popular blogs use very simple robots.txt files. Their contents vary, depending on the needs of the specific site.

We hope this article helped you learn how to optimize your WordPress robots.txt file.
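
For the manual sitemap step, a small Python sketch might look like this. It appends `Sitemap:` lines that are not already present and reads them back with `urllib.robotparser` (the `site_maps()` method needs Python 3.8 or later). The sitemap URLs, the `example.com` domain, and both helper names are placeholders, not values from the article.

```python
from urllib.robotparser import RobotFileParser


def list_sitemaps(robots_txt):
    """Return the sitemap URLs declared in a robots.txt string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none


def add_sitemaps(robots_txt, sitemap_urls):
    """Return robots_txt with any missing Sitemap lines appended."""
    existing = set(list_sitemaps(robots_txt))
    missing = [url for url in sitemap_urls if url not in existing]
    if not missing:
        return robots_txt
    lines = robots_txt.rstrip("\n").splitlines()
    lines.append("")  # blank line between the rules and the sitemap entries
    lines.extend("Sitemap: " + url for url in missing)
    return "\n".join(lines) + "\n"


# Hypothetical starting file and sitemap URLs.
ROBOTS_TXT = "User-agent: *\nDisallow: /readme.html\n"
SITEMAPS = [
    "https://example.com/post-sitemap.xml",
    "https://example.com/page-sitemap.xml",
]

if __name__ == "__main__":
    print(add_sitemaps(ROBOTS_TXT, SITEMAPS))
```

Running the helper a second time on its own output leaves the file unchanged, so it is safe to repeat after a plugin update.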

If you liked this article, then please subscribe to our YouTube Channel for WordPress video tutorials. You can also find us on Twitter and Facebook. Page maintained by Syed Balkhi.

I keep getting the error message below on Google Webmaster Tools. I am basically stuck. It also contains files like wp-config.php.

If you cannot see a robots.txt file, you can go ahead and create a new one. Thank you for your response. Is there a path directory I can take that leads to this folder? Like, is it under Settings, etc.?

I had decent web traffic to my website. It suddenly dropped to zero in the month of May, and I have been facing the issue ever since. Please help me to recover my website. I have a problem with robots.txt. Please help me to show separate robots.txt files. I have all the separate robots.txt files, but only one robots.txt shows. Please explain why you included Disallow: I do not understand the implications of this line.

Is this important for the beginner? You have explained the other two Disallowed ones. Page partially loaded: not all page resources could be loaded. This can affect how Google sees and understands your page. Fix availability problems for any resources that can affect how Google understands your page.

This is because all CSS stylesheets associated with plugins are disallowed by the default robots.txt. Whenever I search for my site on Google, this text appears below the link: Make sure that the box next to is unchecked. Every time I remove a plugin, it shows an error on some pages of that plugin.

I loved this explanation. As a beginner I was very confused about robots.txt. But now I know what its purpose is. Can you explain why?

Please ensure that it is accessible or remove it completely. Because you are aware that Google will index all your uploads pages as public URLs, right? And then you will get slapped with errors for the page itself. Is there something I am missing here?

Overall, it's the actual pages that Google crawls to generate image maps, NOT the uploads folders. Then you would have the problem that all the smaller image sizes, and other images that are for the UI, will also get indexed. Most likely, your web host allows your site to be accessed with both www and non-www URLs.

Thanks for the quick reply. I have already done that, but I am not able to see any change. Is there any other way to resolve it? Yoast's blog post about this topic was right above yours in my search, so of course I checked them both. They contradict each other a little bit.

For example, Yoast said that disallowing plugin directories and others might hinder the Google crawlers when fetching your site, since plugins may output CSS or JS. Here is the link to his post. Maybe you can re-check, because it is very hard to choose whose word to take for it.

I have a question about adding sitemaps. Thanks for the elaborate outline of using the robots file. Does anyone know if Yahoo is using this robots.txt file? Nothing from Google, as it should be. You should be adding them manually to your Google and Bing Webmaster Tools and make sure you look at their feedback about your XML sitemap. I understood robots.txt. What is a sitemap, and how do I create a sitemap for my site?

It does not tell them what can and cannot be indexed. You need to use the noindex robots meta tag to have a page noindexed. But I need you to give a tutorial on how to upload robots.txt. As a beginner, uploading that file seems to be a drastic problem. By the way, thanks for sharing such beneficial information.

I know that if you get the syntax wrong when editing .htaccess, it can take your site offline. I am a newbie and need to block these annoying multiple URLs from Russia, China, Ukraine, etc. So why should I use Allow again? Since lacking the robots.txt file does not stop search engines from crawling a site, is there any sort of hard data on exactly how much having the file will improve SEO performance?