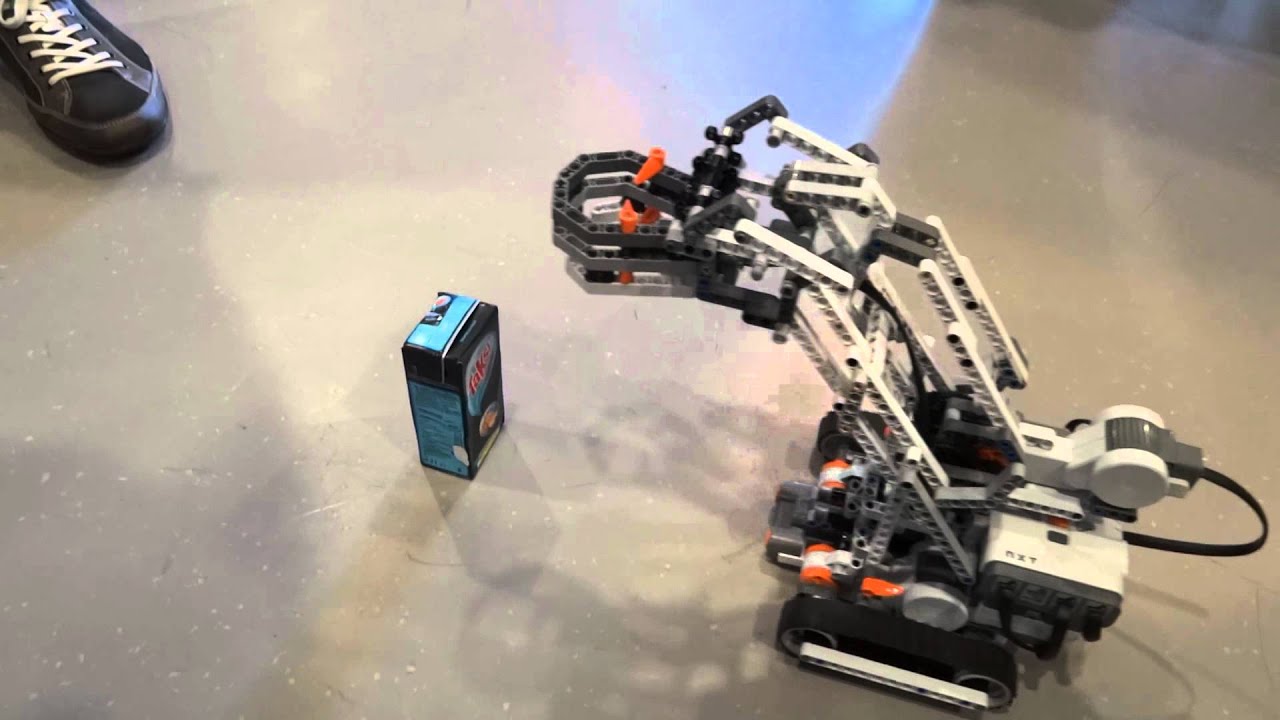

Ever thought of controlling your Lego Mindstorms robot via voice? The EV3 itself does not have enough performance to handle that scenario. But with a combination of the latest and greatest voice services, like Amazon Alexa, Google Home or even Cortana, it's possible to control a Lego Mindstorms robot via voice. All code is available on GitHub, so you can go ahead and put it onto your own devices.

There is also a video available in German, but you'll get the point. The sample has been built by my friend Christian Weyer and me. The robot was built by Christian's son and continuously improved so that it can grab a cup of freshly tapped beer.

If you develop a Skill for Amazon Alexa or an Action for Google Home (referring to both as Skill from now on), you'll normally start by putting a lot of your business logic directly into your Skill.

For testing or very simple Skills this is ok-ish, but there's generally one problem: the code is directly integrated into your Skill. So, if you want to extract your code later, e.g. into a library, that takes extra work. Speaking of a library: that's something you should always do. Encapsulate your code in a small library and provide an API that the Skill uses to execute the functionality. That way, you can reuse your library wherever you want.
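
To illustrate the library idea, here is a minimal sketch in which the business logic lives in a small class with its own API, and the Skill-side handler only translates between speech and that API. The names ClawLibrary, set_claw and handle_claw_request are hypothetical and not taken from the sample repository.

```python
class ClawLibrary:
    """Hypothetical business-logic library that knows nothing about voice platforms."""

    VALID_OPERATIONS = ("open", "close")

    def __init__(self) -> None:
        self.state = "closed"

    def set_claw(self, operation: str) -> str:
        """Open or close the claw and return its new state."""
        if operation not in self.VALID_OPERATIONS:
            raise ValueError(f"unknown claw operation: {operation!r}")
        self.state = "open" if operation == "open" else "closed"
        return self.state


def handle_claw_request(library: ClawLibrary, operation: str) -> str:
    """Thin Skill-side handler: call the library's API and phrase the result for speech."""
    try:
        state = library.set_claw(operation)
    except ValueError:
        return "Sorry, I don't know that claw operation."
    return f"The claw is now {state}."
```

Because ClawLibrary has no dependency on any voice platform, the same set_claw call could back an Alexa Skill, a Google Home Action, an HTTP API or a plain unit test.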

I guess that most of the time, you'll already have your business logic hosted somewhere and just want a VUI (Voice User Interface) for it: you speak some words, they are transformed into API calls that execute the business logic, and the results are read back to the user.

You simply build a Skill for every platform you want to support and call your own API for the business logic. Of course, this needs more implementation time, but it's easier to test, since you can test your business logic by simply calling your API via Postman. And if this works, the VUI is a piece of cake. For the sample on GitHub we used that architecture as well: the Skill calls an API, the API Bridge, which forwards the commands to the robot. In our sample, this API is built in Node.js. Additionally, it hosts a WebSocket server via Socket.IO, which the robot connects to. The API Bridge includes, for example, a controller for the claw.
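
A minimal sketch of the bridge idea follows. It is not the sample's Node.js code: it uses Python's standard library http.server, a plain list of callbacks stands in for the Socket.IO connections of the robot, and the route /claw/<operation> as well as the message fields are hypothetical.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Callable, List

# Stand-in for connected robots: in the real sample, Socket.IO connections play this role.
connected_robots: List[Callable[[dict], None]] = []


def broadcast(message: dict) -> None:
    """Send a command message to every connected robot."""
    for send in connected_robots:
        send(message)


class BridgeHandler(BaseHTTPRequestHandler):
    """Hypothetical API Bridge: POST /claw/<operation> forwards a claw command."""

    def do_POST(self) -> None:
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "claw" and parts[1] in ("open", "close"):
            broadcast({"command": "claw", "operation": parts[1]})
            self._reply(200, {"ok": True})
        else:
            self._reply(404, {"ok": False, "error": "unknown command"})

    def _reply(self, status: int, body: dict) -> None:
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the console quiet


def start_bridge(port: int = 0) -> ThreadingHTTPServer:
    """Start the bridge in a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), BridgeHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Because the bridge speaks plain HTTP, it can be tested without any voice device, for example by sending a POST request to /claw/open with Postman or curl.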

The API Bridge is also deployed to Azure automatically whenever a commit is pushed to the master branch, which makes iteration cycles and testing incredibly fast. Several intents (currently only in German) are available, among them the ClawIntent, which has a slot called ClawOperation.

A slot is a placeholder within a sentence that can take different values depending on what the user wants. In this case, ClawOperation holds the operation to perform on the claw. The first step in the intent handler is to check whether we got a slot value at all: if the user doesn't name an operation, the intent is still triggered after a certain timeout, but without a value for the ClawOperation slot. In this case, we ask the user again what they want to do.
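
A minimal Python sketch of this first step, assuming the raw Alexa request JSON is available as a dict (the sample itself is a Node.js Skill). The slot name ClawOperation comes from the text; the function names and the English reprompt are hypothetical.

```python
from typing import Optional


def get_slot_value(envelope: dict, slot_name: str) -> Optional[str]:
    """Return the spoken value of a slot, or None if the user didn't provide one.

    In an Alexa IntentRequest, an unfilled slot is still listed under
    request.intent.slots, but without a "value" key.
    """
    intent = (envelope.get("request") or {}).get("intent") or {}
    slot = (intent.get("slots") or {}).get(slot_name) or {}
    return slot.get("value") or None  # treat an empty string like a missing value


def missing_slot_response(envelope: dict, slot_name: str = "ClawOperation") -> Optional[dict]:
    """Ask again (keeping the session open) if the slot is empty; otherwise return None."""
    if get_slot_value(envelope, slot_name) is not None:
        return None  # a value is present: the handler continues by mapping it
    question = {"type": "PlainText", "text": "What do you want to do with the claw?"}
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": question,
            "reprompt": {"outputSpeech": question},
            "shouldEndSession": False,
        },
    }
```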

If we got a value, we try to map it to something the API Bridge understands. If this is not possible, we tell the user, who then has to start over. Otherwise, we have everything we need to call the API using the executeApi function.
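
The mapping step could look roughly like the sketch below. The spoken German values in CLAW_OPERATION_MAP and the API operation names open and close are hypothetical placeholders, since the actual slot values aren't listed here; execute_api is passed in as a callable so the sketch stays independent of any HTTP library.

```python
from typing import Callable, Dict, Optional

# Hypothetical mapping from spoken (German) slot values to API Bridge operations.
CLAW_OPERATION_MAP: Dict[str, str] = {
    "öffnen": "open",
    "auf": "open",
    "schließen": "close",
    "zu": "close",
}


def map_claw_operation(spoken: str) -> Optional[str]:
    """Translate a spoken slot value into something the API Bridge understands."""
    return CLAW_OPERATION_MAP.get(spoken.strip().lower())


def handle_claw_value(spoken: str, execute_api: Callable[[str], str]) -> str:
    """Map the slot value; if that fails, ask the user to start over, else call the API."""
    operation = map_claw_operation(spoken)
    if operation is None:
        return "Sorry, I didn't understand that. Please start over."
    return execute_api(operation)
```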

The executeApi function is a simple wrapper around request, a Node.js HTTP client library. Within the Skill, we use it to call the API Bridge. In all successful cases, we respond in a way that keeps the session open, so it's possible to issue several voice commands without having to start the Skill for each command. But if an error happens, we respond and end the session. The good part here, as mentioned in the architecture idea above, is that the Skill is only a voice-to-HTTP translator, which makes it easily portable to other systems.
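
In Python terms, the wrapper and the two kinds of responses might look like the sketch below. It uses urllib.request instead of the Node.js request library, and the function names, the URL and the spoken texts are hypothetical; the part that matters is shouldEndSession, the field in an Alexa response that decides whether the session stays open for the next command.

```python
import urllib.request
from typing import Callable


def execute_api(url: str, send: Callable = urllib.request.urlopen, timeout: float = 5.0) -> bool:
    """Hypothetical Python stand-in for executeApi: POST to the API Bridge.

    Returns True for a 2xx answer and False for any network or HTTP error.
    """
    request = urllib.request.Request(url, data=b"", method="POST")
    try:
        with send(request, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError, HTTPError and socket timeouts are all subclasses of OSError
        return False


def speak(text: str, end_session: bool) -> dict:
    """Build a minimal Alexa response with plain-text speech."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def respond_to_api_result(succeeded: bool) -> dict:
    if succeeded:
        # Keep the session open so the next voice command needs no new Skill start.
        return speak("Done. What should the robot do next?", end_session=False)
    # On errors, report the problem and close the session.
    return speak("Sorry, the robot could not be reached.", end_session=True)
```

Passing send as a parameter keeps the wrapper testable: a fake function can stand in for urlopen, so no running API Bridge is needed.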

The last part to make it work is the software for the EV3 itself. The first thing we did was install ev3dev, a Debian Linux-based operating system that is compatible with the EV3. At first, we wanted to use Node.js, but later versions of Chromium, and therefore of Node.js, weren't an option on the EV3. With a broken heart, we decided to use Python 3, which is also available on and supported by ev3dev. Additionally, we needed to install pip, the package installer for Python, because we had to download a dependency from the Python Package Index. The first step in the script is to import the client and create a connection.

After that, we can simply use the client's on method to subscribe to a message type and execute the corresponding command when that message is sent to the robot. That way, we wired up all commands. Finally, the script has to keep waiting for incoming messages. Otherwise the connection would be closed, making the robot unresponsive to other commands.
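
The following is a minimal, standard-library-only sketch of that pattern: handlers are registered per message type with an on method, and a blocking wait loop keeps the script alive. The class name, the queue standing in for the Socket.IO connection, and the claw/move message names are hypothetical; on the real robot, the handlers would drive the EV3 motors through ev3dev.

```python
import queue
from typing import Callable, Dict, Optional, Tuple

Message = Tuple[str, dict]  # (message type, payload)


class CommandClient:
    """Hypothetical stand-in for the robot's Socket.IO client; a queue replaces the socket."""

    def __init__(self) -> None:
        self.incoming: "queue.Queue[Optional[Message]]" = queue.Queue()
        self.handlers: Dict[str, Callable[[dict], None]] = {}

    def on(self, message_type: str, handler: Callable[[dict], None]) -> None:
        """Register the command to execute when a message of this type arrives."""
        self.handlers[message_type] = handler

    def wait(self) -> None:
        """Block and dispatch incoming messages until None (a shutdown marker) arrives.

        Without this loop the script would simply end, and the robot would stop
        reacting to further commands.
        """
        while True:
            message = self.incoming.get()
            if message is None:
                return
            message_type, payload = message
            handler = self.handlers.get(message_type)
            if handler is not None:  # unknown message types are ignored
                handler(payload)
```

Registering one small handler per message type keeps each command isolated, so adding a new command means adding one on call rather than touching the dispatch loop.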

The robot itself consists of a few main parts: a claw, which can be opened and closed to grab the finest refreshments; a sensor to recognise a cup in front of the robot, so it knows when to open and close its claw; and motors, which are, well, obviously for driving. Besides single commands, it can run a predefined program to grab some beer in front of the robot.

The Skill's intents (currently only in German) can open and close the claw, move the robot, move it forwards or backwards, and run the predefined program. The ClawIntent handler and the script that connects the robot to the server are part of the GitHub repository, along with all other code.

Don't forget to check out the GitHub repository and the video.